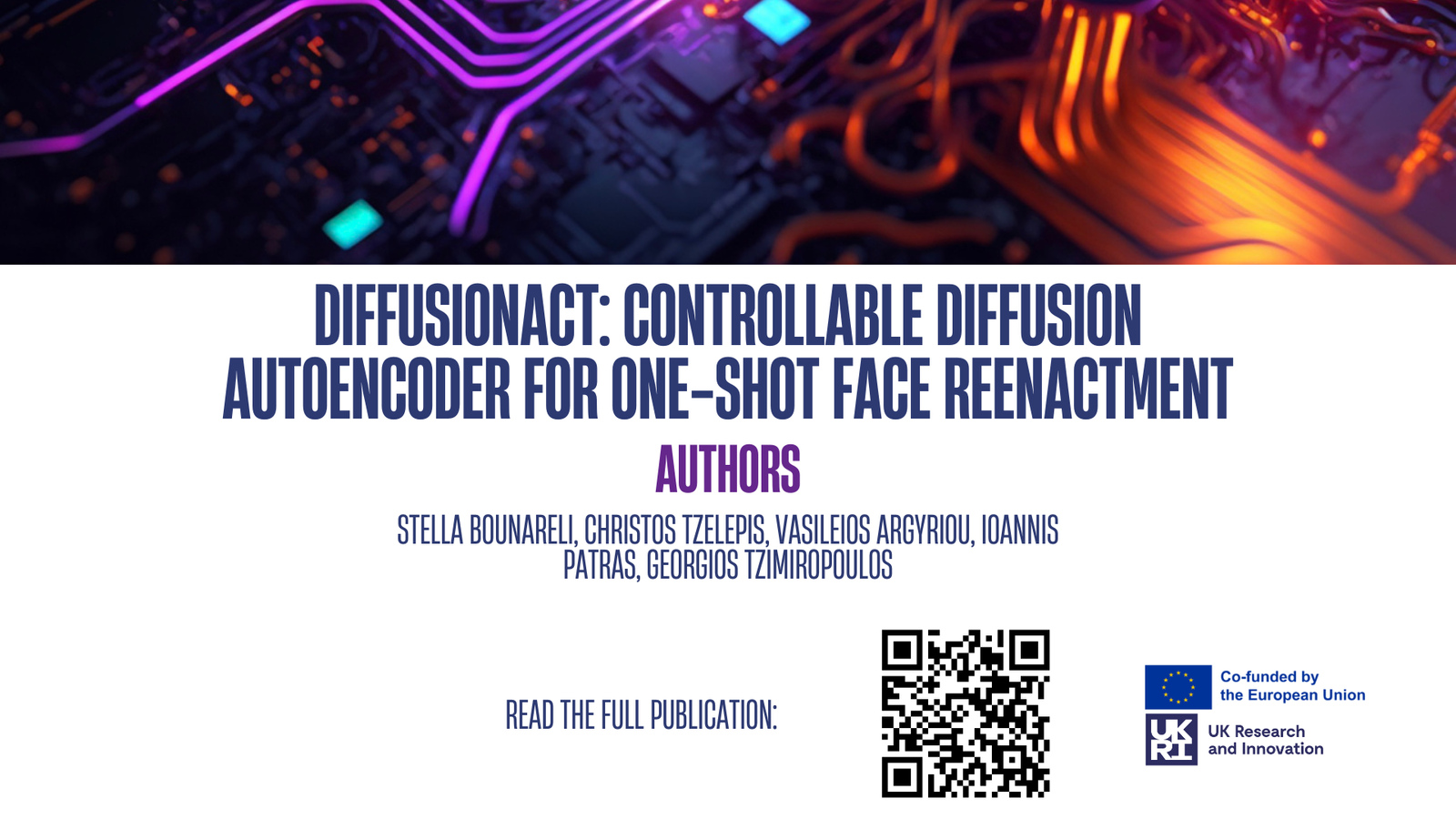

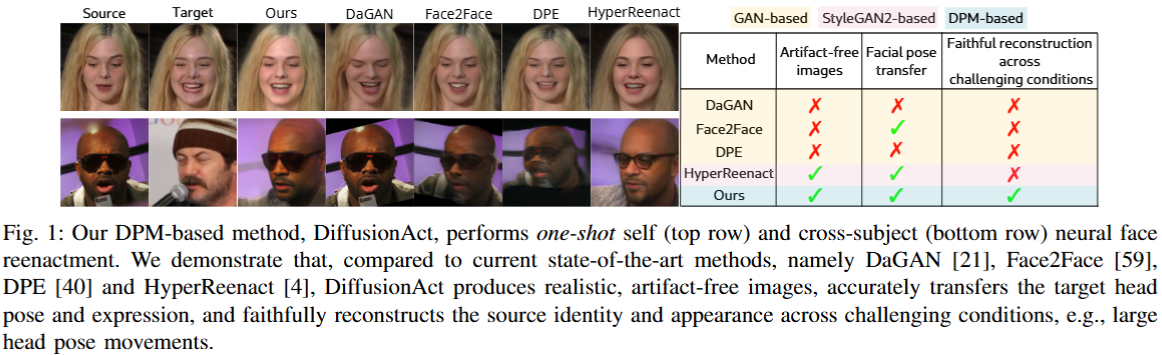

We are excited to share that the RAIDO Project’s latest paper, “DiffusionAct: Controllable Diffusion Autoencoder for One-shot Face Reenactment,” is now live on IEEE Xplore following its presentation at FG2025.

DiffusionAct moves beyond the limitations of traditional GAN-based methods, which are prone to visual artifacts, by building on Diffusion Probabilistic Models (DPMs) to produce high-fidelity face reenactments. DiffusionAct requires only a single source image (one-shot) and allows precise control over facial expressions while faithfully preserving the subject’s identity.

Congratulations to our partners at Kingston University and Queen Mary University of London for this achievement.
