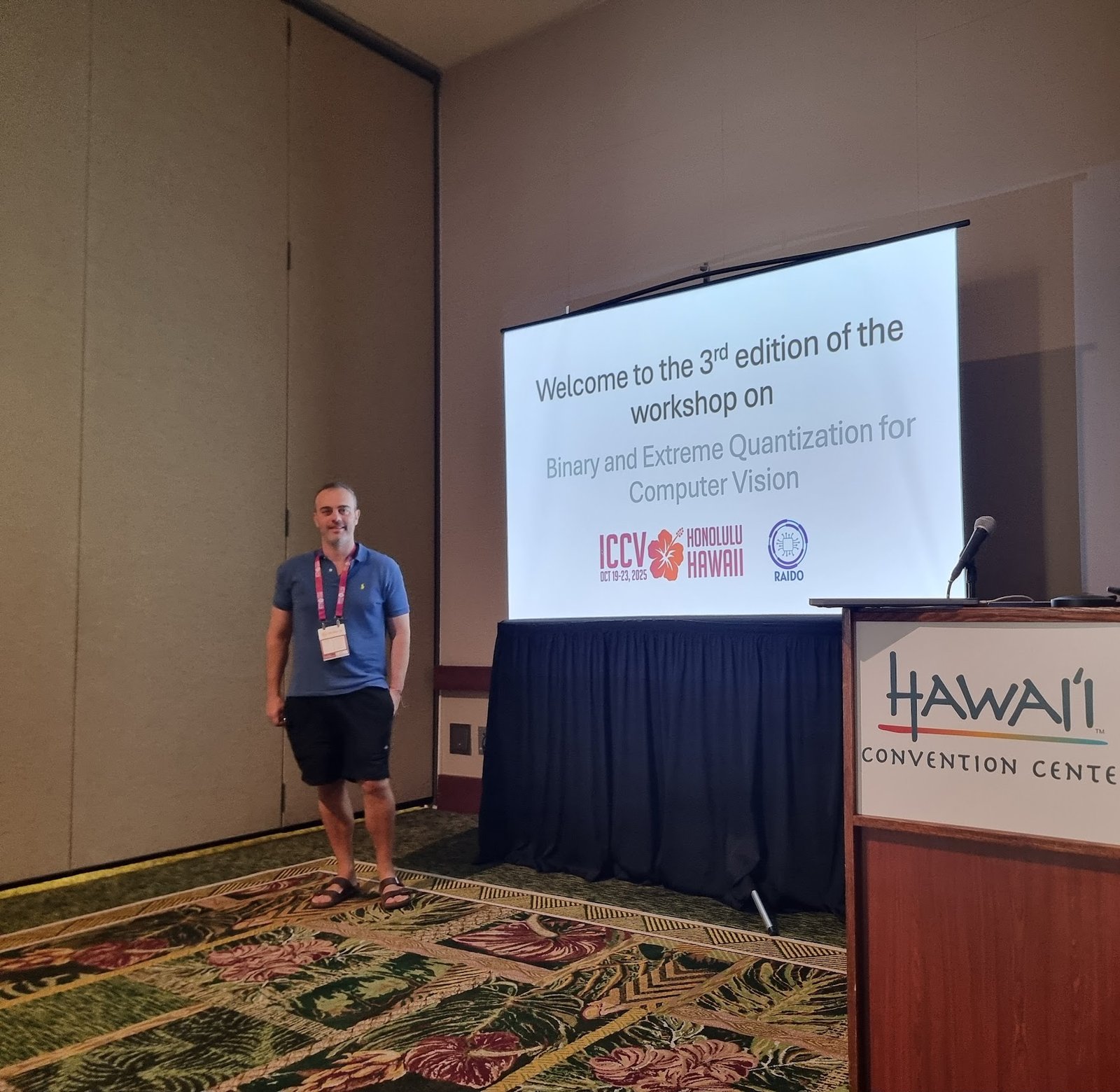

RAIDO is proud to announce its successful participation in and support of the “Binary and Extreme Quantization for Computer Vision” Workshop at the International Conference on Computer Vision (ICCV) 2025. The event took place in Honolulu on October 20, 2025.

Fostering Innovation in Efficient AI

RAIDO helped bring together leading academics and industry experts to address the critical challenge of deploying high-performance computer vision models on resource-constrained devices.

We were delighted to support the event alongside two esteemed colleagues from our partner institution, Queen Mary University of London (QMUL), whose expertise was instrumental in shaping a compelling program. More details on the workshop are available on the official page: https://binarynetworks.io/.

Success and Value: Driving Efficiency in Computer Vision

The workshop was a resounding success. It was well attended and prompted lively, high-quality discussion of some of the most challenging and relevant problems in efficient AI.

A short summary:

- Keynote Highlights: The schedule featured cutting-edge invited talks, including Amir Gholami’s presentation on XQuant, which addresses the “memory wall” in LLM inference through KV cache rematerialization. The talk illustrated concrete progress toward deploying large models with a much smaller memory footprint.

- Breadth of Research: The accepted papers and presentations covered a wide range of topics central to model compactness and efficiency, including low-bit quantization of Large Vision-Language Models (LVLMs) and generative models (e.g., diffusion models and VAR-Q), as well as specialized methods for Vision Transformers (e.g., mitigating GELU quantization errors).

- Technical Depth: The discussions directly tackled the central challenge of closing the accuracy gap between low-bit quantized networks and their full-precision counterparts, reinforcing the feasibility of advanced on-device AI.

Value to Attendees:

- Practical and Theoretical Solutions: Attendees received direct, actionable insights into advanced techniques for achieving model compactness, computational efficiency, and low power consumption, all of which are essential for widespread deployment on resource-constrained devices.

- Future-Proofing Knowledge: The workshop provided comprehensive coverage of emerging areas such as hardware implementation, Post-Training Quantization (PTQ), and new methods that combine quantization with other efficiency techniques (such as pruning or dynamic models), offering a roadmap for next-generation efficient deep learning systems.

- Addressing Critical Bottlenecks: Researchers gained practical knowledge on tackling the memory and computation bottlenecks that limit large-model deployment, particularly through approaches such as quantized KV caching and new designs such as Multi-Boolean Architectures.

Key Takeaways for RAIDO

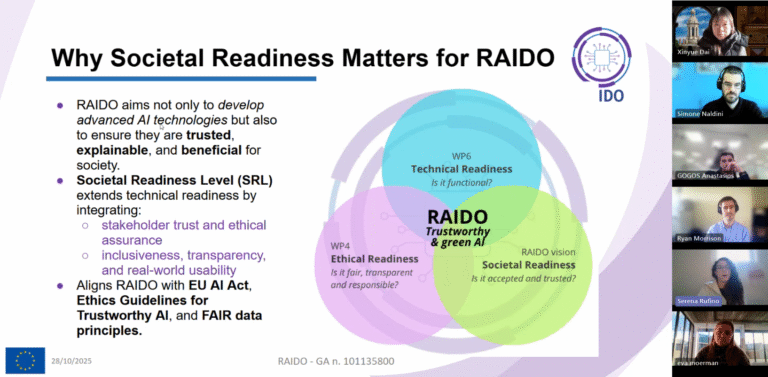

Our involvement in supporting and attending the workshop provided valuable insights that will directly inform RAIDO’s strategic direction:

- Cutting-Edge Techniques: We gained a deeper understanding of the latest advances in extreme quantization, particularly methods that achieve better trade-offs between performance and efficiency. This knowledge will be integrated into our R&D roadmap immediately to improve the energy efficiency of our deployed systems.

- Validation of Approach: The community’s strong focus on hardware-aware design reaffirmed the value of RAIDO’s current trajectory in developing AI solutions optimized for low-power computing platforms.

- New Collaborations: The event opened doors to promising new academic and industrial partnerships, which will strengthen our ability to accelerate the development of deployable, sustainable AI.

We are thrilled to have played a part in this crucial scientific exchange and look forward to building on these learnings.